Projects

Democratizing Uncertainty Quantification

Background

Uncertainty quantification (UQ) for advanced mathematical models can offer valuable insights in scientific projects.

However, widespread adoption of advanced UQ methods is held back by the technical difficulty of integrating UQ codes with numerical models. In addition, there is a lack of reproducible benchmark problems, which are needed to drive future UQ development.

Contribution

I started the UM-Bridge project, which makes it straightforward to apply numerous advanced UQ codes to any numerical simulation tool. UM-Bridge enables scaling UQ applications up to cloud platforms and supercomputers, and is the technical foundation for the first library of UQ benchmark problems.
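The idea of decoupling UQ codes from simulation tools can be illustrated with a small sketch. It is not the UM-Bridge API: the `Model` interface, `QuadraticModel`, and `monte_carlo_mean` are hypothetical names made up for this example, and the toy model stands in for a real simulation code. The point is only that the UQ routine sees a generic "vector in, vector out" map and never touches the model's internals.

```python
import random
from abc import ABC, abstractmethod
from statistics import mean
from typing import List, Sequence


class Model(ABC):
    """Hypothetical minimal model interface: a map from a parameter vector to an output vector."""

    @abstractmethod
    def input_size(self) -> int:
        ...

    @abstractmethod
    def output_size(self) -> int:
        ...

    @abstractmethod
    def evaluate(self, parameters: Sequence[float]) -> List[float]:
        ...


class QuadraticModel(Model):
    """Toy stand-in for a simulation code: f(x0, x1) = [x0^2 + x1]."""

    def input_size(self) -> int:
        return 2

    def output_size(self) -> int:
        return 1

    def evaluate(self, parameters: Sequence[float]) -> List[float]:
        x0, x1 = parameters
        return [x0 * x0 + x1]


def monte_carlo_mean(model: Model, num_samples: int, seed: int = 0) -> List[float]:
    """Plain Monte Carlo estimate of the mean model output under standard normal inputs.

    The routine only uses the generic interface, so any Model can be plugged in.
    """
    rng = random.Random(seed)
    outputs = []
    for _ in range(num_samples):
        sample = [rng.gauss(0.0, 1.0) for _ in range(model.input_size())]
        outputs.append(model.evaluate(sample))
    return [mean(out[i] for out in outputs) for i in range(model.output_size())]


if __name__ == "__main__":
    # For standard normal inputs, the exact mean of x0^2 + x1 is 1.
    print(monte_carlo_mean(QuadraticModel(), num_samples=20000))
```

Swapping `QuadraticModel` for another implementation of the same interface leaves `monte_carlo_mean` unchanged, which is the separation of concerns the project description refers to.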

Outcome

UM-Bridge has facilitated numerous challenging academic UQ applications, ranging from prototypes to large-scale cloud computing. Early industry adoption is in progress.

Participants from more than 10 international institutions are contributing to the UM-Bridge benchmark library.

- Google Open Source Peer Bonus Award (link)

- SIAM News article: Lowering the Entry Barrier to Uncertainty Quantification

A. Reinarz, L. Seelinger

SIAM News, Oct. 2023 (link)

- UM-Bridge enabling UQ at digiLab Solutions (digiLab academy blog post)

- Blog post: Enabling Complex Scientific Applications

A. Reinarz, L. Seelinger

Better Scientific Software (link)

- UM-Bridge: Uncertainty Quantification and Modeling Bridge

L. Seelinger, V. Cheng-Seelinger, A. Davis, M. Parno, A. Reinarz

Journal of Open Source Software (link)

Uncertainty Quantification for Large-Scale Models

Background

Uncertainties in data, for example due to measurement errors, are ubiquitous. Quantifying their effect on model predictions and inferences is a major challenge, with simple approaches often necessitating excessive numbers of simulation runs.

Contribution

The key contribution of my PhD project is a massively parallelized version of Multilevel Markov chain Monte Carlo (MLMCMC). It was accepted into the MIT Uncertainty Quantification library (MUQ).
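The multilevel idea behind MLMCMC can be summarized by a telescoping sum. As a sketch, assume a hierarchy of models indexed by levels 0 to L, from cheapest to most accurate, with quantity of interest Q_ℓ and posterior distribution ν^ℓ on level ℓ (this notation is introduced here for illustration and does not come from the project description):

```latex
\mathbb{E}_{\nu^{L}}[Q_L]
  = \mathbb{E}_{\nu^{0}}[Q_0]
  + \sum_{\ell=1}^{L} \left( \mathbb{E}_{\nu^{\ell}}[Q_\ell] - \mathbb{E}_{\nu^{\ell-1}}[Q_{\ell-1}] \right)
```

Each term on the right is estimated separately with MCMC samples. When neighboring levels are strongly correlated, the correction terms have small variance, so most samples can be drawn on the cheap coarse level and only a few on the expensive fine levels.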

Outcome

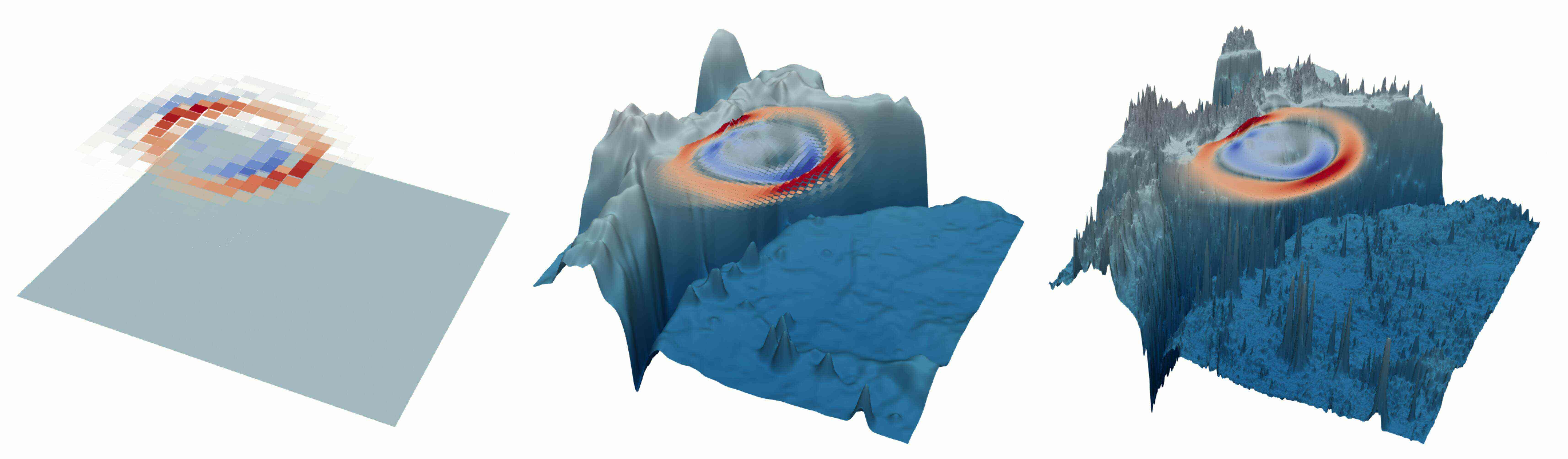

Using this method, we solved a Bayesian inference problem for a challenging tsunami model on ~3,500 processor cores of SuperMUC-NG at the Leibniz Supercomputing Centre.

- High Performance Uncertainty Quantification with Parallelized Multilevel Markov Chain Monte Carlo

L. Seelinger, A. Reinarz, L. Rannabauer, M. Bader, P. Bastian, R. Scheichl

The International Conference for High Performance Computing, Networking, Storage, and Analysis 2021 (link) (preprint)

- MUQ: The MIT Uncertainty Quantification Library

M. Parno, A. Davis, L. Seelinger

Journal of Open Source Software (link)

Scalable Solver for Multiscale PDE Models

Background

Mathematical models are hard to solve numerically when multiple physical scales interact.

This is the case for carbon fiber composites, which limits industry-standard solvers to relatively small-scale simulations.

Contribution

As part of CerTest, I developed the first highly scalable implementation of GenEO. This mathematical method automatically identifies and eliminates critical components of the solution space, thus retaining the solver’s efficiency.

My GenEO implementation can be applied to a large class of PDE problems and was accepted into the DUNE numerics framework.

Outcome

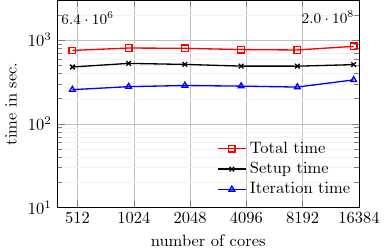

We successfully simulated large composite aircraft structures on up to ~16,000 processor cores of the ARCHER UK national supercomputer, exceeding the industry standard by a factor of ~1000.

- Multilevel Spectral Domain Decomposition

P. Bastian, R. Scheichl, L. Seelinger, A. Strehlow

SIAM Journal on Scientific Computing (link) (preprint)

- A High-Performance Implementation of a Robust Preconditioner for Heterogeneous Problems

L. Seelinger, A. Reinarz, R. Scheichl

Parallel Processing and Applied Mathematics, LNCS (link) (preprint)

- High-performance dune modules for solving large-scale, strongly anisotropic elliptic problems with applications to aerospace composites

R. Butler, T. Dodwell, A. Reinarz, A. Sandhu, R. Scheichl, L. Seelinger

Computer Physics Communications vol. 249 (link) (preprint)

- Dune-composites – A new framework for high-performance finite element modelling of laminates

A. Reinarz, T. Dodwell, T. Fletcher, L. Seelinger, R. Butler, R. Scheichl

Composite Structures (link) (preprint)